According to the GitHub page of ControlNet, “ControlNet is a neural network structure to control diffusion models by adding extra conditions.” You can use it to do quite a few things to enhance the generation of your AI images. In this article, I am going to show you how to use ControlNet with the Automatic1111 Stable Diffusion Web UI.

[Update 5/12/2023: ControlNet 1.1 is out, please see this new article for updates.]

Enable the Extension

- Click on the Extensions tab and then on the Install from URL tab.

- Enter https://github.com/Mikubill/sd-webui-controlnet in the URL box and click on Install.

- Click on Installed and click on Apply and restart UI.

- ControlNet has quite a few models, but you only need to download the ones you plan to use. Here is the models download page.

- Download the control_sd15_openpose.pth file and place it in the extensions/sd-webui-controlnet/models folder under your webui folder.

- Restart webui.
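If you prefer to script the download, the following Python sketch puts the openpose model where the steps above say it should go. It assumes the Hugging Face URL quoted in the Kaggle comment at the end of this post still serves the file; `controlnet_model_path` and `download_model` are hypothetical helper names, not part of the extension.

```python
import urllib.request
from pathlib import Path

# URL taken from the Kaggle comment in this post; assumed to still resolve.
MODEL_URL = (
    "https://huggingface.co/lllyasviel/ControlNet/resolve/main/"
    "models/control_sd15_openpose.pth"
)


def controlnet_model_path(webui_dir: str, filename: str = "control_sd15_openpose.pth") -> Path:
    """Return the models folder location used by the extension under the webui folder."""
    return Path(webui_dir) / "extensions" / "sd-webui-controlnet" / "models" / filename


def download_model(webui_dir: str, url: str = MODEL_URL) -> Path:
    """Download the .pth file into the models folder, skipping it if it is already there."""
    dest = controlnet_model_path(webui_dir, url.rsplit("/", 1)[-1])
    dest.parent.mkdir(parents=True, exist_ok=True)
    if not dest.exists():
        # urlretrieve follows HTTP redirects, which Hugging Face uses for large files.
        urllib.request.urlretrieve(url, dest)
    return dest
```

For example, `download_model("/path/to/stable-diffusion-webui")` would fetch the file once and return its path; restart webui afterwards, as above.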

Openpose txt2img Example

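- We are going to generate an image using ControlNet’s openpose feature to transfer the pose from one image to another. You can use any checkpoint model with openpose as long as it is based on Stable Diffusion 1.5, since control_sd15_openpose was trained for that version. In this example, I am using BasilticAbyssDream 0.3.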

- Click on the txt2img tab and enter delicate, masterpiece, female cyborg in the positive prompt. Set the other parameters as you like.

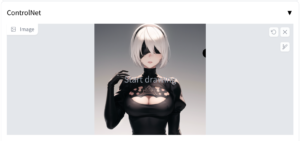

- Expand ControlNet and drag an image to the image area. I am using the image from my previous post.

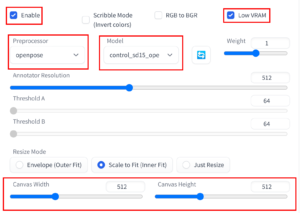

- Check Enable and Low VRAM. The recommendation is to check Low VRAM if your GPU has less than 8GB of VRAM. My GPU has 12GB, yet it crashed when Low VRAM was unchecked, so I leave it checked.

- Select openpose from the Preprocessor dropdown and control_sd15_openpose from the Model dropdown.

- Lastly, set Canvas Width and Canvas Height according to the input image.

- This is the result image. You can see that the pose of the input image has been transferred to the new image.

- Note that two images are generated. The second one is the detected pose.

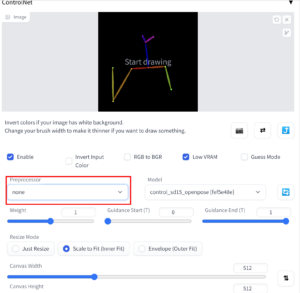

- If you save this pose image, you can speed up generation the next time you use it: drag the pose image into ControlNet’s image box and select none in the Preprocessor dropdown, since the pose no longer needs to be detected.
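The same txt2img setup can also be driven through the web UI’s API instead of the browser. This is a minimal sketch, assuming webui was launched with the `--api` flag at the default address, and that the ControlNet extension accepts units under `alwayson_scripts` with the field names from its API wiki (`input_image`, `module`, `model`, `lowvram`). Those names have changed between extension versions, so check the `/docs` page of your install. All helper names are hypothetical, and sizing the output from the input PNG is my stand-in for matching the canvas size to the input image.

```python
import base64
import json
import struct
import urllib.request

API_URL = "http://127.0.0.1:7860"  # assumed default address; webui must run with --api


def png_size(data: bytes) -> tuple[int, int]:
    """Read width and height from a PNG's IHDR chunk (PNG inputs only)."""
    if data[:8] != b"\x89PNG\r\n\x1a\n" or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # IHDR data starts at byte 16: width and height as big-endian unsigned 32-bit ints.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def controlnet_unit(image: bytes, use_preprocessor: bool = True) -> dict:
    """One ControlNet unit; field names follow the extension's API wiki (assumption)."""
    return {
        "input_image": base64.b64encode(image).decode("ascii"),
        # "none" mirrors reusing a saved pose image without the preprocessor.
        "module": "openpose" if use_preprocessor else "none",
        "model": "control_sd15_openpose",  # may need the hash suffix shown in the Model dropdown
        "lowvram": True,  # same as checking Low VRAM
    }


def txt2img_payload(pose_image: bytes, prompt: str, use_preprocessor: bool = True) -> dict:
    """Build a /sdapi/v1/txt2img request body with one openpose unit."""
    width, height = png_size(pose_image)
    return {
        "prompt": prompt,
        # Round down to a multiple of 8, the step size of the UI's Width/Height sliders.
        "width": width - width % 8,
        "height": height - height % 8,
        "alwayson_scripts": {
            "controlnet": {"args": [controlnet_unit(pose_image, use_preprocessor)]}
        },
    }


def post(endpoint: str, payload: dict) -> dict:
    """POST a JSON payload to the web UI and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{API_URL}{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

A call might look like `post("/sdapi/v1/txt2img", txt2img_payload(pose_bytes, "delicate, masterpiece, female cyborg"))`. The response’s `images` field holds base64-encoded images, and with the preprocessor enabled the detected pose is typically returned alongside the generated image.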

Openpose img2img Example

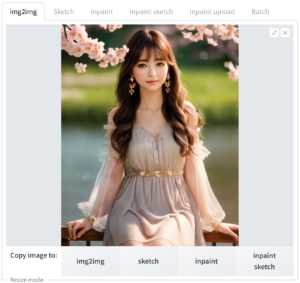

- ControlNet also works with img2img. Click on the img2img tab.

- Drag an image of your choice into the Image box. I am using this image:

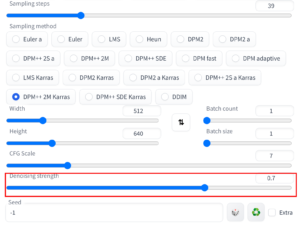

- Set the parameters as you like. The one that matters most is the denoising strength; 0.7 is the sweet spot for this image, but you may have to experiment a bit to find the best value for yours.

- Expand ControlNet and drag an image to the image area. I am using the image from my previous post.

- Check Enable and Low VRAM. As in the txt2img example, check Low VRAM if your GPU has less than 8GB of VRAM; I leave it checked because my 12GB GPU crashed without it.

- Select openpose from the Preprocessor dropdown and control_sd15_openpose from the Model dropdown.

- Lastly, set Canvas Width and Canvas Height according to the input image.

- This is the result image. You can see that the pose of the input image has been transferred to the new image.

- Notice that because of the high denoising strength of 0.7, this image is quite different from the original image.
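Through the API, the img2img variant differs mainly in the `init_images` list and the `denoising_strength` value. This sketch assumes webui runs with `--api` and that the ControlNet unit field names (`input_image`, `module`, `model`, `lowvram`) match your extension version; `img2img_payload` and `denoising_sweep` are hypothetical helpers. The sweep builds one request per strength, which is one way to do the experimenting the denoising step calls for.

```python
import base64


def b64(data: bytes) -> str:
    """Encode raw image bytes as the base64 text the web UI API expects."""
    return base64.b64encode(data).decode("ascii")


def img2img_payload(init_image: bytes, pose_image: bytes, prompt: str,
                    denoising_strength: float = 0.7) -> dict:
    """Build a /sdapi/v1/img2img request body with one openpose ControlNet unit."""
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return {
        "init_images": [b64(init_image)],  # the picture dragged into the img2img Image box
        "prompt": prompt,
        # Higher values let the result drift further from init_image.
        "denoising_strength": denoising_strength,
        "alwayson_scripts": {
            "controlnet": {
                "args": [{
                    "input_image": b64(pose_image),  # the pose reference in the ControlNet box
                    "module": "openpose",
                    "model": "control_sd15_openpose",  # may need the hash suffix from the dropdown
                    "lowvram": True,  # same as checking Low VRAM
                }]
            }
        },
    }


def denoising_sweep(init_image: bytes, pose_image: bytes, prompt: str,
                    strengths=(0.5, 0.6, 0.7, 0.8)) -> list[dict]:
    """Build one payload per denoising strength so the results can be compared."""
    return [img2img_payload(init_image, pose_image, prompt, s) for s in strengths]
```

Each payload would be POSTed as JSON to `/sdapi/v1/img2img` (for example with `urllib.request`), and comparing the results side by side makes it easier to pick a value for your image.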

Now that you have learned the basics of using ControlNet with Stable Diffusion, try it out and let us know which ControlNet model is your favorite.

My other tutorials:

How to Use LoRA Models with Automatic1111’s Stable Diffusion Web UI

How to Use gif2gif with Automatic1111’s Stable Diffusion Web UI

This post may contain affiliate links. When you click on a link and purchase a product, we receive a small commission to keep us running. Thanks.

How do I place the .pth file in the extensions/sd-webui-controlnet/models folder under the webui folder in Kaggle?

I have not tried this yet. However, it would be something like:

```
!wget -O /kaggle/working/stable-diffusion-webui/extensions/sd-webui-controlnet/models/control_sd15_openpose.pth https://huggingface.co/lllyasviel/ControlNet/resolve/main/models/control_sd15_openpose.pth
```

Is it possible to generate many pictures on different days with the same girl’s face?

Maybe with some extension to the Automatic1111 or other AI generators?

You can train a LoRA model with the girl’s pictures and use the trained LoRA in your prompt.

Thank you for your response. This option is good for a small number of characters, but it becomes a problem if I have more than ten thousand different characters. My goal is productivity and efficiency.